How many servers does Google need for its web search? How many pages are crawled and indexed? Starting from Google's 2009 statement that it uses 1 kJ of energy per search, we estimate that Google used $\approx$ 130,000 servers for its search in 2008. We also speculate that Google indexes only about 5% of its crawled pages.

This short article makes some rough approximations to estimate how many servers are dedicated to Google search and how many queries such a "theoretical" server handles. I use the term theoretical because Google doesn't use a single universal machine for crawling, indexing and querying. Instead, a query first hits the web server, which sends it to the index servers, and finally the results are assembled by the doc servers (see here for details). Assuming a server uses 150 watts on average (motivated below), we conclude that Google used about 130,000 servers in 2008 and that each server handles approx. 9 queries and crawls 54 pages per minute.

On 11.2.2009, Urs Hölzle, Senior Vice President of Operations at Google, published some numbers on energy efficiency in his blog post "Powering a Google search":

> Together with other work performed before your search even starts (such as building the search index) this amounts to 0.0003 kWh of energy per search, or 1 kJ.

This number is the first input for my calculation. Next, we need the approximate number of searches performed in 2008. Google Search Statistics on Internet Live Stats lists the number of Google searches per year: approx. 600 billion searches in 2008, which is about 1.6 billion searches a day (by 2012, Google had 1.2 trillion searches per year).
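Both inputs are easy to sanity-check. The short Python sketch below converts the quoted 0.0003 kWh into joules (1 kWh = 3.6 · 10^6 J) and spreads the roughly 600 billion searches of 2008 over the year. The constant names are illustrative, and using 366 days is my own assumption, reflecting that 2008 was a leap year; the result barely differs from using 365.

```python
# Inputs taken from the text (constant names are illustrative)
KWH_PER_SEARCH = 0.0003   # Google's 2009 figure: 0.0003 kWh per search
JOULES_PER_KWH = 3.6e6    # 1 kWh = 1000 W * 3600 s
SEARCHES_2008 = 600e9     # approx. searches in 2008 (Internet Live Stats)
DAYS_2008 = 366           # assumption: 2008 was a leap year

# Energy per search in joules: about 1.08 kJ, which Google rounds to 1 kJ
joules_per_search = KWH_PER_SEARCH * JOULES_PER_KWH

# Average number of searches per day in 2008
searches_per_day = SEARCHES_2008 / DAYS_2008

print(f"energy per search: {joules_per_search:.0f} J")
print(f"searches per day:  {searches_per_day / 1e9:.2f} billion")
```

This prints 1080 J per search and 1.64 billion searches per day, consistent with the rounded figures of 1 kJ and 1.6 billion used in the text.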

For 1.6 billion queries per day and 1 kJ of energy per query, we get an average power consumption of

$$\frac{1.6\cdot 10^9 \cdot 1\text{ kJ}}{24\text{ h}} = \frac{1.6\cdot 10^{12}\text{ J}}{86{,}400\text{ s}} \approx 18.52 \cdot 10^6 \,\frac{\text{J}}{\text{s}} \approx 20\text{ MW}.$$

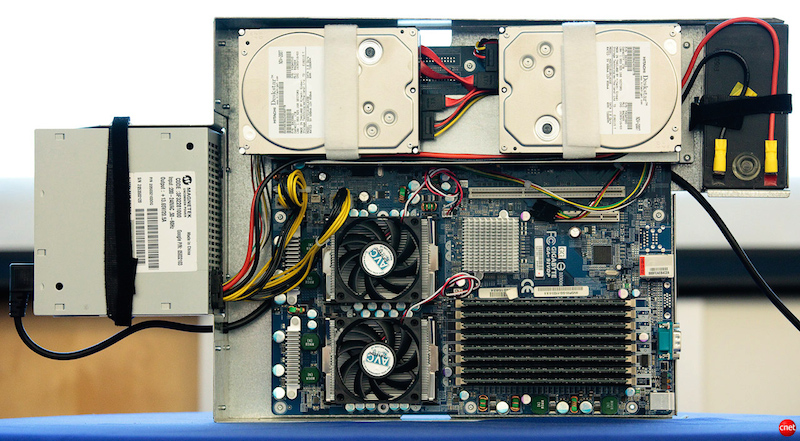

In other words, the computer equipment needed to process 1.6 billion queries per day draws approximately 20 MW. Let us assume that a Google server in 2008 consumed 150 watts on average. This assumption is motivated by a peak power consumption of 215 W per server node: since servers do not run at peak load all the time, I assume an average somewhat below this peak. The peak figure is based on Stephen Shankland's April 2009 CNET article "Google uncloaks once-secret server" (details on the hardware can be found here).

It follows that Google had about 20 MW / 150 W ≈ 130,000 servers dedicated to search in 2008. So a single server handles about 12,300 queries per day, or approx. 9 queries per minute. Of course, this hypothetical single server also crawls the web, builds the index and does matrix computations for ranking (see Wikipedia: PageRank), among other things.
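The server count and the per-server query rate follow from two divisions. The sketch below assumes, as the text does, a rounded average power of 20 MW and an average draw of 150 W per server; like the text, it rounds the server count to 130,000 before dividing up the queries. The names are illustrative.

```python
POWER_W = 20e6            # rounded average power for search (from ~18.5 MW)
WATTS_PER_SERVER = 150    # assumed average draw of one 2008 server
QUERIES_PER_DAY = 1.6e9   # approx. Google searches per day in 2008
MINUTES_PER_DAY = 24 * 60

# Number of servers the power budget can sustain: about 133,333
servers_exact = POWER_W / WATTS_PER_SERVER
# Round to the nearest 10,000, giving the 130,000 used in the text
servers = round(servers_exact, -4)

# Query load per server
queries_per_server_day = QUERIES_PER_DAY / servers
queries_per_server_min = queries_per_server_day / MINUTES_PER_DAY

print(f"servers (exact):    {servers_exact:,.0f}")
print(f"servers (rounded):  {servers:,.0f}")
print(f"queries/server/day: {queries_per_server_day:,.0f}")
print(f"queries/server/min: {queries_per_server_min:.1f}")
```

With these inputs it prints 133,333 and 130,000 servers, about 12,308 queries per server per day and 8.5 queries per server per minute, which the text rounds to 9.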

Nine queries per minute doesn't seem like a lot. In 2012, Internet Live Stats reported (same link as above) that Google crawled 20 billion pages per day. Scaling this proportionally with the number of searches (which doubled from 2008 to 2012), we get approximately 20/2 = 10 billion crawled pages per day in 2008. This gives about 77,000 pages per machine per day, or just 54 pages per minute. Since a query is handled in under 200 ms most of the time (see also "Powering a Google search"), nine queries occupy a server for only roughly 1.8 seconds per minute, so it seems that crawling, and especially indexing, dominates query processing. Let's summarize my findings:
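The crawl estimate and the claim that answering queries leaves most of a server's time free can be checked the same way. This is a crude model under stated assumptions: it scales the 2012 crawl volume by the 2x growth in searches, and it charges the full ~200 ms latency of each query to a single server, although in reality a query passes through web, index and doc servers. The names are illustrative.

```python
SERVERS = 130_000
MINUTES_PER_DAY = 24 * 60

# Crawl volume: 20 billion pages/day in 2012, scaled by the 2x growth in searches
CRAWLED_PER_DAY_2012 = 20e9
SEARCH_GROWTH_2008_TO_2012 = 2.0
crawled_per_day_2008 = CRAWLED_PER_DAY_2012 / SEARCH_GROWTH_2008_TO_2012

pages_per_server_day = crawled_per_day_2008 / SERVERS
pages_per_server_min = pages_per_server_day / MINUTES_PER_DAY

# Rough query time: ~9 queries/min, each charged 0.2 s on one server (assumption)
QUERIES_PER_SERVER_MIN = 9
SECONDS_PER_QUERY = 0.2
query_busy_fraction = QUERIES_PER_SERVER_MIN * SECONDS_PER_QUERY / 60

print(f"pages/server/day: {pages_per_server_day:,.0f}")
print(f"pages/server/min: {pages_per_server_min:.1f}")
print(f"time spent on queries: {query_busy_fraction:.0%}")
```

It prints about 76,923 pages per server per day (53.4 per minute, close to the 54 quoted in the text) and a query share of about 3% of each minute, leaving the rest for crawling, indexing and ranking under this model.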

First, we must ask ourselves what is meant by "indexed". I define a web page as indexed when it can be retrieved via the search engine's interface. This should be equivalent to the number of pages in the inverted index. Unfortunately, Google doesn't provide precise information about the size of its index. From the Official Google Blog post "We knew the web was big" (25.7.2008):

> Recently, even our search engineers stopped in awe about just how big the web is these days -- when our systems that process links on the web to find new content hit a milestone: 1 trillion (as in 1,000,000,000,000) unique URLs on the web at once
>
> We don't index every one of those trillion pages -- many of them are similar to each other, or represent auto-generated content similar to the calendar example that isn't very useful to searchers.

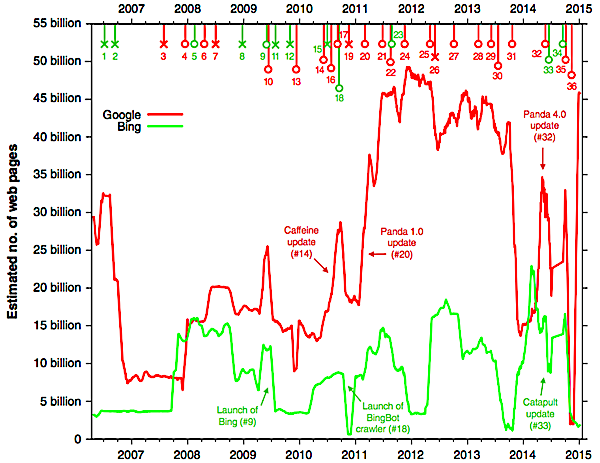

To estimate the size of Google's index, I used yearly averages based on numbers from "Estimating search engine index size variability: a 9-year longitudinal study"; see also the website of one of the authors, WorldWideWebsize. This is the only sound and comprehensible source on the size of Google's (and Bing's) search index that I know of. Also note that Google indexes only approximately 5% of its crawled pages!

Motivated by the above study, let's assume that in 2008 Google indexed approximately 15 billion pages. This leads to the following estimate of the number of indexed web pages per machine in 2008:

$$\frac{15{,}000{,}000{,}000}{130{,}000} \approx 115{,}000$$